Welcome to this guide to multimodal pipelines, an increasingly important topic in artificial intelligence (AI) and large language models. In this quick overview, we will walk through how multimodal systems work, combining technical details with practical insights.

Imagine you’re building a robot that needs to understand the world around it. If you give the robot only a microphone, it can hear sounds but can’t see anything. If you give it only a camera, it can see but can’t hear. Each of these is a “mode” of understanding the world—audio is one mode, and visual is another.

A multimodal pipeline is like giving your robot a microphone, a camera, and maybe even more sensors, all working together. This way, the robot can both see and hear, making it much better at understanding what’s happening around it. It can recognize a person talking and also listen to what they’re saying, for example.

What are multimodal pipelines

In technical terms, a multimodal pipeline is a system that combines different types of data, such as text, images, and sound, to make better-informed decisions or provide more helpful responses. In the robot analogy, it is like upgrading your robot so that it has more ways to perceive and interpret the world around it.


The name “multimodal” is straightforward: it refers to the ability of these systems to process multiple types, or modes, of data, such as text, images, audio, or even video. Combining these data types can make models more robust and comprehensive. Key characteristics include:

- Data Fusion: Multimodal systems combine different types of data into a unified representation or model.

- Contextual Understanding: By analyzing multiple data streams, these systems can often capture context better than unimodal counterparts, which see only one type of data.

- Enhanced Performance: Incorporating several data types can improve predictive accuracy and reliability, although the gain depends on the task and the quality of each data source.
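To make data fusion more concrete, here is a minimal sketch of two common strategies, assuming each modality has already been turned into a fixed-length feature vector or a prediction score. Early fusion concatenates the per-modality vectors into one joint vector before a model sees them; late fusion lets each modality produce its own prediction and then combines the scores. The feature values, scores, and weights below are hypothetical placeholders, and the weighted average is just one simple way to combine scores, not the method of any particular system.

```python
from typing import Dict, List


def early_fusion(features: Dict[str, List[float]]) -> List[float]:
    """Concatenate per-modality feature vectors into one joint vector.

    Modalities are sorted by name so the layout of the fused vector is
    deterministic regardless of dictionary insertion order.
    """
    fused: List[float] = []
    for modality in sorted(features):
        fused.extend(features[modality])
    return fused


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-modality prediction scores with a weighted average."""
    total_weight = sum(weights[m] for m in scores)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(scores[m] * weights[m] for m in scores) / total_weight


# Hypothetical, hand-picked values for illustration only.
features = {"text": [0.2, 0.7], "image": [0.9, 0.1, 0.4], "audio": [0.5]}
print(early_fusion(features))  # -> [0.5, 0.9, 0.1, 0.4, 0.2, 0.7]

scores = {"text": 0.8, "image": 0.6, "audio": 0.3}
weights = {"text": 0.5, "image": 0.3, "audio": 0.2}
print(late_fusion(scores, weights))  # weighted average of the three scores
```

Early fusion lets a single model learn interactions between modalities but needs all inputs present and aligned; late fusion is easier to assemble from separate per-modality models and can keep working, with reweighting, when one modality is unavailable.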

Multimodal pipelines are already used in a wide range of applications:

- Healthcare: Think diagnostic tools that analyze both text-based medical records and X-ray images.

- Entertainment: Imagine video game characters that respond not just to keystrokes but also to your voice and facial expressions.

- E-commerce: Systems that recommend products based on text reviews, user behavior, and image analysis are already a reality.

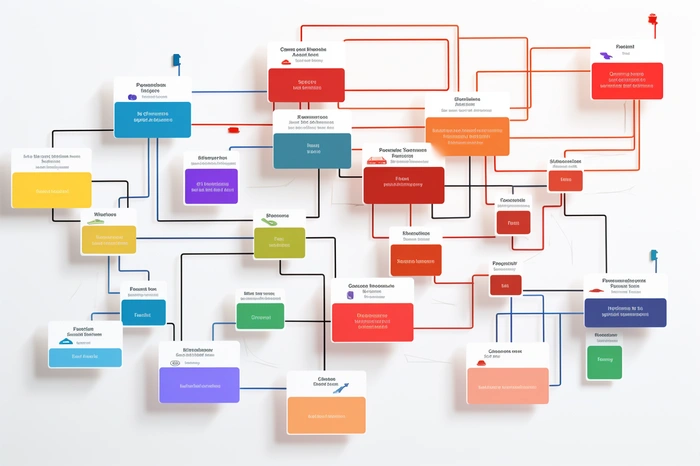

Components of a multimodal system

At its core, a multimodal system is built from several key components that together form a pipeline:

- Data Ingestion: The first step involves collecting various types of data.

- Data Preprocessing: Each data type is then preprocessed according to its unique requirements.

- Feature Extraction: Crucial features from each data type are isolated for further processing.

- Model Training: A model, often a neural network, is trained on the extracted features.

- Inference: Finally, the model makes predictions or takes actions based on new, unseen data.
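The five stages above can be traced end to end in a short standard-library Python sketch. It is a toy illustration under stated assumptions: the samples, the `KEYWORDS` list, and the mean-brightness image feature are hypothetical stand-ins for real data and learned encoders, and a simple nearest-centroid classifier replaces the neural network a real system would typically train. Its only purpose is to show how data flows from one stage to the next.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Sample:
    text: str          # a short caption or note (text modality)
    pixels: List[int]  # a tiny grayscale "image" as raw 0-255 values
    label: str = ""    # left empty for unseen data at inference time


# 1. Data ingestion: hard-coded toy data here; a real system would read
#    files, databases, or sensor streams.
def ingest() -> List[Sample]:
    return [
        Sample("bright sunny day", [220, 240, 250, 230], "outdoor"),
        Sample("sunny beach photo", [200, 210, 255, 240], "outdoor"),
        Sample("dark quiet room", [20, 35, 10, 40], "indoor"),
        Sample("dim room at night", [30, 15, 25, 5], "indoor"),
    ]


# 2. Preprocessing: a separate step tailored to each modality.
def preprocess(sample: Sample) -> Tuple[List[str], List[float]]:
    tokens = sample.text.lower().split()         # text: lowercase and tokenize
    pixels = [p / 255.0 for p in sample.pixels]  # image: scale to [0, 1]
    return tokens, pixels


# Hypothetical keyword list standing in for a learned text encoder.
KEYWORDS = ["sunny", "bright", "beach", "dark", "room", "night"]


# 3. Feature extraction: turn each modality into numbers, then fuse them.
def extract_features(tokens: List[str], pixels: List[float]) -> List[float]:
    text_feats = [float(tokens.count(k)) for k in KEYWORDS]
    image_feats = [sum(pixels) / len(pixels)]  # mean brightness
    return text_feats + image_feats            # early fusion by concatenation


def featurize(sample: Sample) -> List[float]:
    return extract_features(*preprocess(sample))


# 4. Model training: compute one average feature vector (centroid) per label.
def train(samples: List[Sample]) -> Dict[str, List[float]]:
    grouped: Dict[str, List[List[float]]] = {}
    for s in samples:
        grouped.setdefault(s.label, []).append(featurize(s))
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in grouped.items()
    }


# 5. Inference: assign unseen data to the label with the closest centroid.
def predict(model: Dict[str, List[float]], sample: Sample) -> str:
    feats = featurize(sample)
    return min(model, key=lambda label: math.dist(feats, model[label]))


model = train(ingest())
print(predict(model, Sample("a sunny afternoon", [210, 230, 245, 250])))  # -> outdoor
```

Keeping each stage in its own function mirrors the list above: swapping the keyword counts for a real text encoder, or the centroid model for a neural network, changes one function without touching the rest of the pipeline.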

For a real-world example, consider OpenAI. Its GPT (Generative Pre-trained Transformer) models have been at the forefront of language modeling. Although the GPT series began as text-only models, OpenAI has also moved into multimodal AI: its DALL-E model generates images from text descriptions, illustrating what multimodal systems can do.

Multimodal systems are promising, but they also come with challenges:

- Computational Overhead: Processing and combining several data types typically requires significant computational resources for both training and inference.

- Data Privacy: With multiple data types at play, ensuring user privacy becomes more complex.

- Interpretability: Understanding how multimodal systems arrive at their conclusions can be difficult.

Researchers are actively working to mitigate these issues. More capable hardware and more efficient algorithms continue to be developed, paving the way for more effective and accessible multimodal systems.

Multimodal pipelines combine multiple types of data so that each complements the others, which makes them versatile and powerful. From healthcare to entertainment, their applications are broad and growing. That concludes this quick overview of multimodal pipelines in artificial intelligence and large language models. Whether you are a seasoned tech enthusiast or new to the field, we hope this article has deepened your understanding and piqued your curiosity.


Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Geeky Gadgets may earn an affiliate commission. Learn about our Disclosure Policy.