Apify is a versatile platform for turning websites into usable data through web scraping and automation. Whether you want to gather insights for sales, marketing, or operations, Apify offers scalable, cost-effective tools. This guide by Nick Saraev walks through its core features, practical applications, and advanced functionality so you can get the most out of the platform.

Have you ever found yourself drowning in repetitive tasks or struggling to gather the data you need to make informed decisions? Scraping job listings, collecting customer reviews, and automating tedious workflows can feel overwhelming, especially if you’re not a coding expert. Apify is designed to make web scraping and automation accessible to everyone, from beginners to seasoned developers. Whether you want to streamline business processes, gather data for research, or even create a new revenue stream, Apify has something for you.

Understanding Apify’s Core Components

TL;DR Key Takeaways :

- Apify is a versatile platform for scraping data from the web and automation, built around core components like actors, tasks, and data sets, allowing efficient data extraction and workflow automation.

- It offers pre-built scrapers for platforms like Instagram, Twitter, and Google Maps, simplifying data collection without requiring coding skills.

- Apify integrates with no-code tools like Make.com and Zapier, making automation accessible to non-programmers and streamlining repetitive tasks.

- Advanced features such as scheduling, storage, and proxies enhance performance and scalability, catering to both small and enterprise-level projects.

- Users can monetize custom scrapers by publishing them on the Apify Store, turning technical expertise into a sustainable income stream.

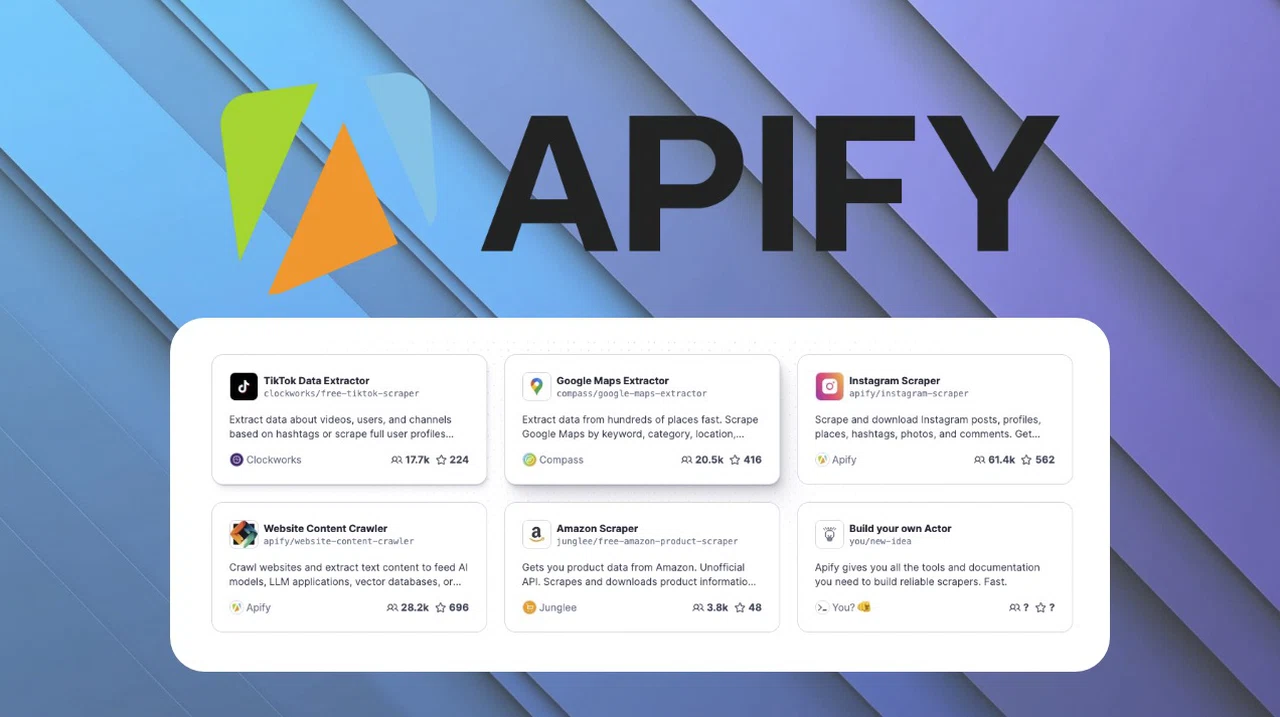

Apify’s functionality is built on three essential components: actors, tasks, and data sets. These elements form the backbone of its web scraping and automation capabilities, working together to streamline data extraction and process automation.

- Actors: Serverless cloud programs that perform a specific job, such as scraping a website or automating browser actions. You can run existing actors or write your own, so they scale from a single-page scrape to complex multi-step workflows.

- Tasks: A task is a saved configuration of an actor that stores its input and run settings, so you can repeat the same job without re-entering them. Each individual execution of an actor or task is called a run.

- Data Sets: Each run stores its results as structured items in a data set, which you can export as JSON, CSV, Excel, and other formats for use in your existing workflows or analytical tools.

These components work seamlessly to help you extract valuable data, automate repetitive processes, and integrate with other tools for enhanced productivity.
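To make the three components concrete, the sketch below builds the Apify REST API (v2) endpoints that correspond to each one: starting an actor run, starting a task run, and downloading a run’s data set in a chosen export format. The endpoint paths and format names reflect our reading of the Apify API reference and should be checked against the current docs. apify~web-scraper is Apify’s public Web Scraper actor; the task and data set IDs are placeholders.

```python
from urllib.parse import quote, urlencode

API_BASE = "https://api.apify.com/v2"  # Apify REST API, version 2

# Export formats we assume the data set items endpoint accepts; verify in the API docs.
EXPORT_FORMATS = {"json", "jsonl", "csv", "xlsx", "xml", "html"}


def actor_runs_url(actor_id: str) -> str:
    """POST here to start a new run of an actor ("username~actor-name" or its ID)."""
    return f"{API_BASE}/acts/{quote(actor_id, safe='~')}/runs"


def task_runs_url(task_id: str) -> str:
    """POST here to start a run of a saved task (an actor plus its stored input)."""
    return f"{API_BASE}/actor-tasks/{quote(task_id, safe='~')}/runs"


def dataset_items_url(dataset_id: str, fmt: str = "json") -> str:
    """GET here to download the items of a data set in the requested format."""
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"unsupported export format: {fmt}")
    query = urlencode({"format": fmt})
    return f"{API_BASE}/datasets/{quote(dataset_id, safe='~')}/items?{query}"


print(actor_runs_url("apify~web-scraper"))
print(task_runs_url("my-user~my-task"))        # hypothetical task ID
print(dataset_items_url("DATASET_ID", "csv"))  # real ID comes from a finished run
```

Real calls also need your Apify API token, which this sketch deliberately leaves out; it only shows how the actor, task, and data set concepts map onto separate endpoints.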

Using Pre-Built Scrapers

Apify offers a library of pre-built scrapers for popular platforms such as Instagram, Twitter, Google Maps, and Indeed. These tools simplify the data extraction process by allowing you to configure input parameters and filters without requiring programming knowledge.

For example:

- Scrape restaurant reviews from Google Maps to analyze customer feedback and identify trends.

- Extract job listings from Indeed to monitor hiring trends or discover new opportunities.

The results arrive in a structured format, ready for immediate use. Pre-built scrapers save you the time and effort of building a tool from scratch while keeping your data collection accurate and consistent.
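Pre-built scrapers can also be started from code instead of the Apify Console. The sketch below prepares, without sending, an HTTP request that starts an actor run with a JSON input, assuming the API v2 `POST /acts/{actorId}/runs` endpoint and bearer-token authentication. The actor ID `some-user~google-maps-reviews` and every input field name are hypothetical: each actor defines its own input schema, documented on its Apify Store page.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"  # Apify REST API, version 2


def build_run_request(actor_id: str, run_input: dict, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a request that starts an actor run with the given input."""
    body = json.dumps(run_input).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/acts/{actor_id}/runs",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # personal API token from your Apify account
        },
    )


# Hypothetical input: the field names depend entirely on the actor you pick.
review_input = {
    "searchQuery": "restaurants in Austin",
    "maxReviews": 100,
    "language": "en",
}

req = build_run_request("some-user~google-maps-reviews", review_input, token="YOUR_TOKEN")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would actually start the run; it is not called here.
```

Keeping request construction separate from sending makes it easy to inspect or log the exact input before spending platform resources on a run.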

Integrating with No-Code Tools

For users without programming expertise, Apify integrates seamlessly with no-code platforms like Make.com and Zapier. These integrations enable you to automate workflows with minimal effort, making automation accessible to a broader audience.

For instance:

- Scrape restaurant data and use it to send personalized marketing emails through an email automation tool.

- Extract job postings and automatically generate tailored resumes using AI-powered tools.

By connecting Apify to no-code platforms, you can streamline repetitive tasks, reduce manual effort, and focus on higher-value activities, making automation a practical solution for everyone.
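In Make.com or Zapier, the step between “scrape” and “send” is usually a mapping of scraped fields into an email template. The Python sketch below shows that transformation logic on its own, as an illustration of what those modules do rather than how they are configured. The field names (`name`, `email`, `rating`, `city`), the rating threshold, and the message text are all hypothetical; match them to the output of the scraper you actually use.

```python
from dataclasses import dataclass


@dataclass
class EmailDraft:
    to: str
    subject: str
    body: str


def drafts_from_restaurants(items: list[dict], min_rating: float = 4.0) -> list[EmailDraft]:
    """Turn scraped restaurant records into personalized email drafts.

    Records without an email address or rated below min_rating are skipped.
    """
    drafts = []
    for item in items:
        email = item.get("email")
        rating = item.get("rating")
        if not email or rating is None or rating < min_rating:
            continue  # nothing to send, or not a good fit for this campaign
        city = item.get("city", "your area")
        drafts.append(EmailDraft(
            to=email,
            subject=f"A quick idea for {item['name']}",
            body=(f"Hi {item['name']} team,\n\n"
                  f"We noticed your {rating:.1f}-star rating in {city} "
                  "and would love to share an idea for turning reviews into repeat visits."),
        ))
    return drafts


# Hypothetical scraped records.
sample = [
    {"name": "Taco Haus", "email": "hello@tacohaus.example", "rating": 4.6, "city": "Austin"},
    {"name": "No Email Diner", "rating": 4.9, "city": "Austin"},
    {"name": "Slow Cafe", "email": "info@slowcafe.example", "rating": 3.2, "city": "Austin"},
]
for draft in drafts_from_restaurants(sample):
    print(draft.to, "|", draft.subject)
```

Filtering before drafting keeps the email tool from receiving records it cannot use, which is the same job a filter module does in a no-code scenario.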

Creating Custom Scrapers

For more complex or unique requirements, Apify lets you build custom scrapers in its web-based integrated development environment (IDE). Libraries like Cheerio, which parses static HTML, and Puppeteer, which controls a headless Chrome browser for pages that need JavaScript, let you build scraping and browser-automation tools tailored to your needs.

AI tools such as ChatGPT can also help you write and debug this code, simplifying development. Custom scrapers give you the flexibility to handle specialized cases, so you can extract exactly the data your project requires.
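Cheerio-style scraping works on raw HTML without launching a browser. Cheerio itself is a Node.js library, so as a stand-in the Python sketch below uses the standard library’s `html.parser` for the same kind of static extraction: collecting the text of every element that carries a given CSS class. The job-listing markup and the `job-title` class are hypothetical, and pages rendered by JavaScript would need a browser tool such as Puppeteer instead.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the whitespace-normalized text of every element with a given class."""

    def __init__(self, css_class: str):
        super().__init__()
        self.css_class = css_class
        self.depth = 0    # > 0 while inside a matching element
        self.buffer = []  # text fragments of the current match
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # nested tag inside a match, e.g. <b>
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.depth = 1
            self.buffer = []

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags like <br/> carry no text

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append(" ".join("".join(self.buffer).split()))

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


page = """
<ul>
  <li><h2 class="job-title">Data <b>Analyst</b></h2><span>Remote</span></li>
  <li><h2 class="job-title featured"> Backend  Engineer </h2></li>
</ul>
"""
parser = ClassTextExtractor("job-title")
parser.feed(page)
parser.close()
print(parser.results)  # ['Data Analyst', 'Backend Engineer']
```

Tracking nesting depth, rather than a simple on/off flag, keeps text from inner tags such as `<b>` inside the match without ending it early.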

Advanced Features for Optimized Performance

Apify includes advanced features designed to enhance performance, scalability, and reliability, making it suitable for both small-scale projects and enterprise-level applications.

- Scheduling: Automate actor runs on a recurring basis to ensure your data remains up-to-date without manual intervention.

- Storage: Efficiently organize and retrieve data using data sets, key-value stores, and request queues, allowing seamless integration with other systems.

- Proxies: Access restricted content and bypass anti-scraping protections using data center, residential, and specialized proxies for enhanced reliability.

These features ensure that Apify can handle large-scale or complex tasks with ease, providing a robust solution for diverse use cases.
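Apify’s request queue, one of the storage types listed above, tracks which URLs a crawler still has to visit and skips duplicates by comparing a unique key derived from each URL. The sketch below is a simplified in-memory stand-in for that idea, not Apify’s implementation: the normalization rules (lowercase scheme and host, drop the fragment, trim a trailing slash) are our own assumptions.

```python
from collections import deque
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def unique_key(url: str) -> str:
    """Simplified URL normalization used to detect duplicate requests (assumed rules)."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


class RequestQueue:
    """In-memory FIFO of URLs to crawl that silently drops duplicates."""

    def __init__(self) -> None:
        self._pending = deque()
        self._seen = set()

    def add(self, url: str) -> bool:
        """Enqueue url unless an equivalent URL was added before; return True if added."""
        key = unique_key(url)
        if key in self._seen:
            return False
        self._seen.add(key)
        self._pending.append(url)
        return True

    def fetch_next(self) -> Optional[str]:
        """Return the next URL to crawl, or None when the queue is empty."""
        return self._pending.popleft() if self._pending else None

    def __len__(self) -> int:
        return len(self._pending)


queue = RequestQueue()
queue.add("https://Example.com/jobs/")
queue.add("https://example.com/jobs#top")     # same page after normalization: skipped
queue.add("https://example.com/jobs?page=2")  # different query string: kept
print(len(queue))  # 2
```

Remembering keys in a separate set means a URL stays deduplicated even after it has been fetched and removed from the pending queue.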

Monetizing Your Scrapers

Apify lets you monetize custom scrapers by publishing them to the Apify Store. Through pricing models such as pay-per-result or subscription-based rentals, you can earn revenue from your technical expertise.

Successful examples include scrapers built for niche markets such as real estate, e-commerce, and social media analytics, which show how developers can turn their skills into a recurring income stream. Publishing a scraper also adds a useful tool to the Apify community.
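The pay-per-result and rental models can be compared with simple arithmetic. The sketch below assumes pay-per-result is quoted per 1,000 results and a rental is a flat monthly fee per user; all prices and volumes are made-up examples, and it ignores Apify’s commission and platform costs, which reduce what the developer actually receives.

```python
from decimal import Decimal


def pay_per_result_revenue(results: int, price_per_1000: Decimal) -> Decimal:
    """Gross revenue when users pay a fixed price per 1,000 results scraped."""
    return Decimal(results) / Decimal(1000) * price_per_1000


def rental_revenue(subscribers: int, monthly_fee: Decimal) -> Decimal:
    """Gross monthly revenue when users rent the scraper for a flat fee."""
    return subscribers * monthly_fee


# Hypothetical month: 250,000 results at $2.50 per 1,000 vs. 40 renters at $15 each.
ppr = pay_per_result_revenue(250_000, Decimal("2.50"))
rent = rental_revenue(40, Decimal("15"))
print(f"pay-per-result: ${ppr:.2f}, rental: ${rent:.2f}")  # pay-per-result: $625.00, rental: $600.00
```

At these example numbers the two models earn about the same; pay-per-result grows with usage, while a rental gives more predictable income. `Decimal` is used so currency amounts are not distorted by floating-point rounding.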

Learning and Overcoming Challenges

To support users in achieving success, Apify provides extensive resources, including detailed documentation and the Web Scraping Academy. These materials cover a wide range of topics, from beginner-friendly guides to advanced tutorials on overcoming anti-scraping protections and integrating APIs.

These resources deepen your understanding of web scraping and browser automation and prepare you to tackle common challenges, whether you are a novice or an experienced developer.

Maximizing the Potential of Apify

Apify enables individuals and businesses to extract actionable data, automate workflows, and build scalable systems tailored to their needs. Its combination of pre-built tools, custom development options, and advanced features makes it a valuable resource for a wide range of applications.

Additionally, the platform’s monetization opportunities enable users to transform their expertise into a profitable venture. By using Apify’s capabilities, you can drive efficiency, innovation, and growth in your projects, unlocking the full potential of web scraping and automation.

Media Credit: Nick Saraev

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Geeky Gadgets may earn an affiliate commission. Learn about our Disclosure Policy.