This week Google released a new feature called Grounding, which lets you improve the results you get from its Gemini AI. We’ve all been there: in the middle of a conversation with an AI, it suddenly starts producing information that seems a bit off or outdated. Frustrating, right?

This is a common issue known as “hallucinations” in the world of large language models (LLMs), where the AI generates responses that aren’t quite accurate. It’s like asking a friend for advice, only to realize they’re relying on old news. Gemini Grounding has been specifically designed to make the AI’s responses both more accurate and more up-to-date. By enabling developers to integrate Google Search with Gemini models, it offers a promising solution to this persistent problem.

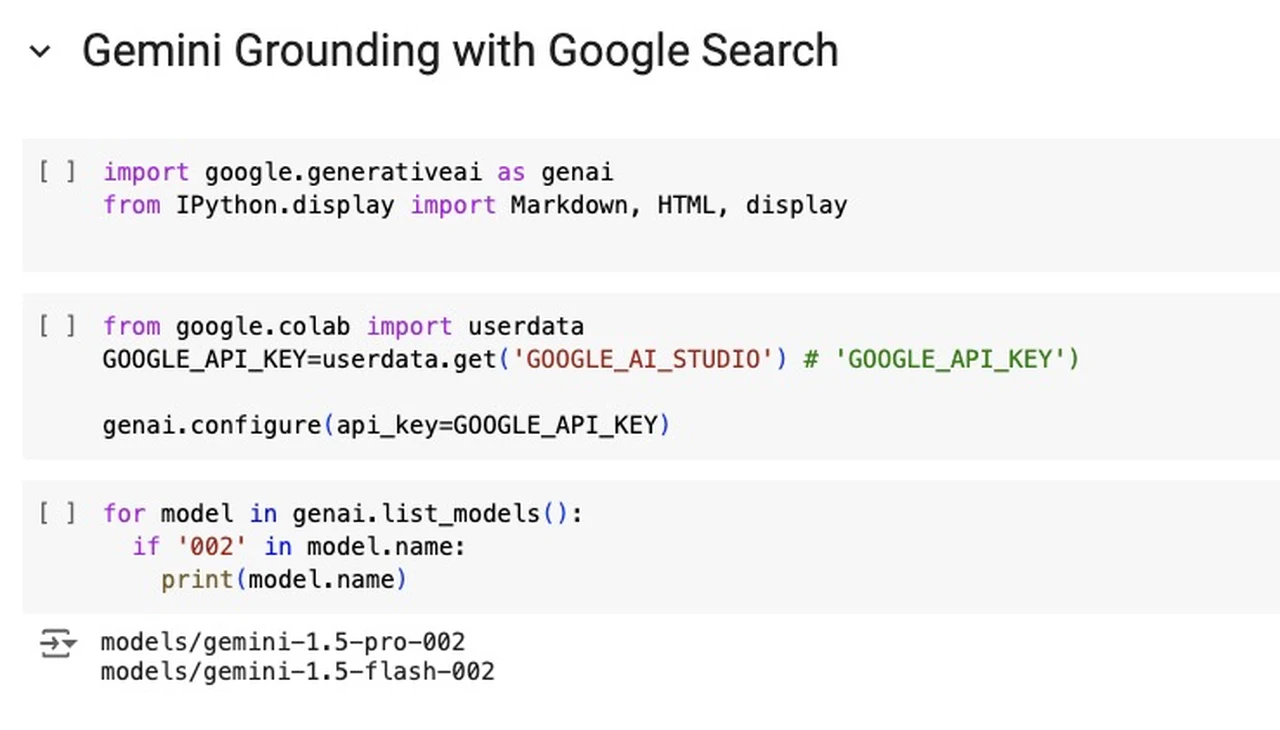

By tapping into real-time search data, this new Grounding feature aims to transform how AI applications retrieve and process information. Through AI Studio and code API access, you can use Gemini Grounding to improve the accuracy and trustworthiness of your AI applications, enabling them to provide a more intelligent and responsive digital experience for users.

Tackling Hallucinations in LLMs

TL;DR Key Takeaways:

- Integrating Google Search with Gemini models significantly improves the accuracy of large language model applications by grounding responses with real-time data, addressing the issue of hallucinations.

- The integration ensures that responses are based on the latest information, which is particularly beneficial in rapidly changing fields like technology, finance, and current events.

- AI Studio and code API access support the integration process, offering both user-friendly and customizable options for incorporating Google Search into applications.

- Dynamic Retrieval Mode optimizes resource usage by employing Google Search only when necessary, balancing efficiency and precision in response generation.

- Providing citations and sources for information retrieved through Google Search enhances transparency and credibility, allowing users to verify the origin of the data.

The integration of Google Search with Gemini models directly addresses one of the most persistent challenges in AI: hallucinations, where models generate incorrect or outdated information because of limitations in their training data. Grounding responses in real-time search data helps keep the information provided both relevant and up-to-date.

Hallucinations in LLM applications have long been a thorn in the side of developers and users alike. These inaccuracies occur when models rely on static datasets that fail to capture recent developments or nuanced information. The integration of Google Search offers a robust solution to this problem, effectively mitigating these inaccuracies by making sure that responses are based on the most current and accurate data available.

Key benefits of addressing hallucinations:

- Improved accuracy in rapidly changing fields

- Enhanced user trust in AI-generated responses

- Reduced risk of misinformation spread

- Increased applicability in real-world scenarios

Harnessing the Power of Real-Time Data

The integration of Google Search with Gemini models provides a powerful mechanism for using real-time data. This feature is particularly valuable in domains where information evolves rapidly, such as technology, finance, and current events. By tapping into the vast and constantly updated repository of information available through Google Search, Gemini models can provide responses that are not only accurate but also reflect the most recent developments in any given field.

Applications benefiting from real-time data integration:

- News aggregation and analysis

- Financial market insights

- Technology trend forecasting

- Real-time event coverage

Implementing Grounding with AI Studio and Code API

To harness this new feature, developers have two primary avenues: AI Studio and code API access. AI Studio offers a user-friendly interface that simplifies the integration process, making it accessible even to those with limited coding experience. For developers seeking more control and customization options, the code API access provides the flexibility to tailor the integration to specific application needs and performance requirements.
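For the code API route, the sketch below shows roughly what a grounded request might look like when sent to the Gemini REST endpoint. It is a minimal illustration, not an official client: the endpoint URL, the model name `gemini-1.5-flash`, and the `google_search_retrieval` tool field are assumptions based on the public Gemini API documentation at the time of writing and should be checked against the current reference. The helper names `build_grounded_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint and model name; check the current Gemini API reference.
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "gemini-1.5-flash:generateContent"
)


def build_grounded_request(prompt: str) -> dict:
    """Return a generateContent body that asks for Google Search grounding."""
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        # Assumption: an empty object requests the search tool with defaults.
        "tools": [{"google_search_retrieval": {}}],
    }


def send(body: dict, api_key: str) -> dict:
    """POST the body to the API and return the parsed JSON response."""
    request = urllib.request.Request(
        f"{API_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Build and inspect the request body without making a network call.
body = build_grounded_request("Who won the most recent Formula 1 race?")
print(json.dumps(body, indent=2))
```

Calling `send(body, api_key)` with a valid key would submit the request. Building the body separately makes it easy to inspect or log exactly what is sent before any search-backed query is made.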

Optimizing Relevance with Dynamic Retrieval Mode

The Dynamic Retrieval Mode is a sophisticated feature that enhances the efficiency and relevance of Google Search integration. By allowing you to set specific thresholds for when to engage the search function, this mode ensures that external data is only retrieved when truly necessary. This optimization not only conserves computational resources but also maintains the delicate balance between using external data and relying on the model’s inherent knowledge.

Benefits of Dynamic Retrieval Mode:

- Improved response time for queries not requiring search

- Reduced computational overhead

- Enhanced relevance of responses

- Flexibility in balancing model knowledge with external data
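The threshold idea can be sketched as follows. This assumes the REST-style tool configuration documented for Gemini 1.5 models (`dynamic_retrieval_config` with `mode` and `dynamic_threshold`); treat those field names as assumptions to verify. The `would_search` function is purely a local illustration of the comparison: in practice the service computes the prediction score for each prompt, and the "greater than or equal to" rule here is an assumption about how that score is compared with the threshold.

```python
def dynamic_retrieval_tool(threshold: float) -> dict:
    """Build a search tool entry that only grounds above a given threshold."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return {
        "google_search_retrieval": {
            "dynamic_retrieval_config": {
                "mode": "MODE_DYNAMIC",  # assumed enum value
                "dynamic_threshold": threshold,
            }
        }
    }


def would_search(prediction_score: float, threshold: float) -> bool:
    """Illustration only: the real score is computed by the service."""
    return prediction_score >= threshold


tool = dynamic_retrieval_tool(0.3)
for score in (0.1, 0.3, 0.9):
    print(f"score={score}: search used -> {would_search(score, 0.3)}")
```

A lower threshold sends more prompts to Google Search, while a higher one leans more on the model’s own knowledge, which is the trade-off between precision and resource use described above.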

Fostering Trust Through Transparency

In an era where information authenticity is paramount, providing citations and sources for data retrieved through Google Search is crucial. This transparency not only enhances the credibility of the AI-generated responses but also enables users to verify information independently. By offering clear pathways to original sources, you build trust with your users and position your application as a reliable source of information.
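As a rough illustration of surfacing sources, the sketch below pulls titles and links out of a grounded response. It assumes the JSON response carries a `groundingMetadata` object with `groundingChunks` entries, each holding a `web` object with `uri` and `title`; those field names follow the Gemini API documentation as best understood here and should be verified. The `sample_response` is hand-written for illustration and is not real API output, and `extract_sources` is a hypothetical helper.

```python
def extract_sources(response: dict) -> list[tuple[str, str]]:
    """Collect (title, uri) pairs from the grounding metadata, if present."""
    sources = []
    for candidate in response.get("candidates", []):
        metadata = candidate.get("groundingMetadata", {})
        for chunk in metadata.get("groundingChunks", []):
            web = chunk.get("web", {})
            if "uri" in web:
                # Fall back to the URI when no title is supplied.
                sources.append((web.get("title", web["uri"]), web["uri"]))
    return sources


# Hand-written sample shaped like the assumed response format.
sample_response = {
    "candidates": [
        {
            "content": {"parts": [{"text": "Example answer."}]},
            "groundingMetadata": {
                "groundingChunks": [
                    {"web": {"uri": "https://example.com/a", "title": "Example A"}},
                    {"web": {"uri": "https://example.com/b", "title": "Example B"}},
                ]
            },
        }
    ]
}

for number, (title, uri) in enumerate(extract_sources(sample_response), start=1):
    print(f"[{number}] {title}: {uri}")
```

Rendering these as a numbered source list under each answer gives users the pathway back to the original material.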

Personalizing Grounding with Google Cloud Vertex AI

Google Cloud Vertex AI takes the concept of grounding a step further by allowing personalization based on individual or organizational data. This feature allows for the integration of private documents stored in the cloud, creating a highly tailored information retrieval system. The ability to ground responses in personal or proprietary datasets opens up new possibilities for specialized applications across various industries.

Potential applications of personalized grounding:

- Corporate knowledge management systems

- Personalized learning platforms

- Industry-specific research tools

- Customized customer support systems

Navigating the Cost Landscape

While the benefits of integrating Google Search with Gemini models are substantial, it’s important to consider the associated costs. The use of real-time search data may incur additional expenses, which can vary based on the frequency and volume of queries. Conducting a thorough cost-benefit analysis is crucial to determine the optimal level of integration for your specific use case and budget constraints.
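A back-of-the-envelope estimate can make that analysis concrete. The sketch below assumes grounded queries are billed per thousand requests; the price used is a placeholder, not Google’s actual rate, and the query volume and grounded fractions are invented for illustration. Substitute figures from the current pricing page and your own traffic.

```python
def monthly_grounding_cost(
    queries_per_month: int, grounded_fraction: float, price_per_1000: float
) -> float:
    """Estimate the monthly cost of the queries that actually use search."""
    grounded_queries = queries_per_month * grounded_fraction
    return grounded_queries / 1000 * price_per_1000


# Placeholder price per 1,000 grounded queries (hypothetical, not a real rate).
PLACEHOLDER_PRICE = 10.0

# Compare grounding every query with grounding only a share of them.
for fraction in (1.0, 0.5, 0.2):
    cost = monthly_grounding_cost(50_000, fraction, PLACEHOLDER_PRICE)
    print(f"{fraction:.0%} of queries grounded: {cost:.2f} per month")
```

Because cost scales with the share of queries that trigger a search, raising the Dynamic Retrieval threshold is one lever for keeping spending within budget.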

Driving Continuous Improvement Through User Feedback

The success and refinement of Google Search integration with Gemini models heavily rely on user engagement and feedback. Encouraging users to share their experiences provides invaluable insights into the feature’s effectiveness and areas for improvement. By fostering a collaborative environment where user input is valued and incorporated, you not only enhance the overall user experience but also drive continuous innovation in AI technology.

By using these advanced features and considering the various aspects of implementation, you can significantly enhance the accuracy, relevance, and trustworthiness of your AI applications. The integration of Google Search with Gemini models represents a major step forward in the evolution of AI, offering new possibilities for creating more intelligent, responsive, and reliable systems.

Media Credit: Sam Witteveen
