Have you ever felt like the world of AI is dominated by massive, resource-hungry models that seem out of reach for most practical applications? You’re not alone. For many developers and organizations, the challenge lies in finding a balance between performance and accessibility—something powerful enough to deliver results but efficient enough to work within real-world constraints. That’s where the Mistral Small 3 steps in, offering a refreshing alternative to the “bigger is better” mindset. With its compact 24-billion-parameter design and open source flexibility, this model promises to change the game for anyone looking to harness AI without breaking the bank—or their hardware.

But what makes Mistral Small 3 truly exciting isn’t just its size or cost-effectiveness; it’s the versatility it brings to the table. Whether you’re building a private chatbot, fine-tuning a model for a niche application, or simply exploring AI on a laptop, this model is designed to meet you where you are. It’s not just about keeping pace with models such as GPT-4o mini or Llama 3.3 70B; it’s about delivering comparable results with a fraction of the complexity. If you’ve been searching for an AI solution that’s as practical as it is powerful, you might be interested in this Mistral Small 3 overview by Sam Witteveen.

Mistral Small 3 AI Model

TL;DR Key Takeaways:

- Mistral Small 3 is a 24-billion-parameter open source AI model that balances efficiency and performance, offering a cost-effective alternative to models such as GPT-4o mini and the larger Llama 3.3 70B.

- It offers a 32,000-token context window, reportedly extendable to as much as 128,000 tokens, along with multilingual support, and it performs well on tasks requiring precision, such as data analysis and conversational AI.

- Released under the Apache 2.0 license, it permits both commercial and non-commercial use, promoting transparency and accessibility in AI development.

- The model quantizes well, so it can be deployed on devices with limited computational resources while still delivering low-latency performance for real-time applications.

- Mistral Small 3 is versatile, suitable for use cases like private chatbots, retrieval-augmented generation, and industry-specific tools, offering flexibility for both cloud and local deployment.

Mistral has unveiled Mistral Small 3, a 24-billion-parameter open source AI model that aims to redefine the balance between efficiency and versatility in artificial intelligence. Released under the permissive Apache 2.0 license, it provides a cost-effective and accessible alternative to models such as Llama 3.3 70B and GPT-4o mini. With its robust architecture, adaptability, and open weights, Mistral Small 3 is poised to play a significant role in AI development across industries. It is designed to deliver high performance while remaining computationally efficient, which helps it stand out in a competitive field. Its key features include:

- 24 Billion Parameters: The model’s size strikes a balance between capability and cost, delivering efficient performance without requiring excessive computational resources.

- Extended Context Window: A 32,000-token context window, reportedly expandable to 64,000 or even 128,000 tokens, lets it process long, complex inputs and generate nuanced outputs; the model is claimed to hold its own against many larger competitors on long-form content.

- Multilingual Proficiency: The model supports a wide range of languages, including Western European languages, Chinese, Japanese, and Korean, making it ideal for global applications such as translation, multilingual content generation, and cross-cultural communication.
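For a private chatbot, a fixed context window means older turns eventually have to be dropped. The sketch below is illustrative only, not anything Mistral ships: it keeps the system message plus the most recent messages that fit a 32,000-token budget. The 4-characters-per-token estimate and the `REPLY_RESERVE` value are assumptions; a real deployment would count tokens with the model’s own tokenizer.

```python
# Illustrative sketch: keep a chat history within a fixed context budget.
# Assumptions (not from Mistral): ~4 characters per token as a crude estimate,
# and a hypothetical reserve of tokens left free for the model's reply.

CONTEXT_WINDOW = 32_000   # tokens, the base figure quoted in this article
REPLY_RESERVE = 1_000     # hypothetical room kept for the model's answer
CHARS_PER_TOKEN = 4       # heuristic, not the model's real tokenizer


def estimate_tokens(text: str) -> int:
    """Crude token estimate (ceiling of chars / 4), never less than 1."""
    return max(1, -(-len(text) // CHARS_PER_TOKEN))


def trim_history(messages: list[dict], budget: int = CONTEXT_WINDOW - REPLY_RESERVE) -> list[dict]:
    """Keep the system message(s) plus the newest turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for msg in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break  # this turn and everything older no longer fits
        kept.append(msg)
        used += cost
    return system + list(reversed(kept))  # restore chronological order


# Usage: five very long user turns (about 10,000 estimated tokens each).
history = [{"role": "system", "content": "You are a helpful assistant."}]
history += [{"role": "user", "content": "x" * 40_000} for _ in range(5)]
trimmed = trim_history(history)
print(len(trimmed))  # 4: the system message plus the three newest turns
```

Walking the history from newest to oldest keeps the most recent exchanges, which is usually what a conversational agent needs; summarizing the dropped turns is a common refinement.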

These features position Mistral Small 3 as a versatile and powerful tool for developers seeking a model that combines scalability and precision.

Open Source Innovation and Accessibility

Mistral Small 3 is released under the Apache 2.0 license, underscoring Mistral’s commitment to open source principles. The license permits both commercial and non-commercial use, so developers and organizations can fine-tune, modify, and deploy the model without restrictive limitations. By releasing open-weight models, Mistral promotes transparency and accessibility, meeting the growing demand for collaborative innovation in AI development.

The open source nature of Mistral Small 3 enables developers to explore its capabilities and adapt it to specific use cases. This flexibility ensures that the model can be tailored to meet the needs of diverse industries, from healthcare to education and beyond.


Performance and Practical Applications

Mistral Small 3 is engineered to deliver efficiency and practicality, offering features that cater to a wide range of technical requirements:

- Structured Outputs: The model can return structured output such as JSON, which suits tasks requiring precision and clarity, such as data analysis, content creation, and conversational AI systems.

- Function Calling: Native function calling lets the model request calls to external tools and APIs, which is useful for developers building complex systems and dynamic applications.

- Optimized for Quantization: The model quantizes well, so it can be deployed locally on devices with limited computational resources, such as laptops, while still delivering low-latency performance for real-time applications.
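Function calling and structured outputs work together: the model emits a machine-readable request, and your own code runs the matching function. The sketch below shows only the application side of that loop. The JSON shape (`name` plus `arguments`) is an assumption modeled loosely on common chat-completion tool-call formats, and `get_order_status` is a hypothetical tool; check Mistral’s API documentation for the exact schema.

```python
import json


# Hypothetical local tool the model is allowed to call.
def get_order_status(order_id: str) -> dict:
    fake_db = {"A100": "shipped", "B200": "processing"}  # stand-in data
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Registry of tools exposed to the model, keyed by the name it will use.
TOOLS = {"get_order_status": get_order_status}


def dispatch_tool_call(raw: str) -> dict:
    """Parse a model-emitted JSON tool call and run the matching local function."""
    call = json.loads(raw)  # structured output: must be valid JSON
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"model requested unknown tool: {name}")
    args = call.get("arguments", {})
    if isinstance(args, str):  # some APIs send arguments as a JSON-encoded string
        args = json.loads(args)
    return TOOLS[name](**args)


# Example: text a model might return when asked "Where is order A100?"
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
result = dispatch_tool_call(model_output)
print(result)  # {'order_id': 'A100', 'status': 'shipped'}
```

Keeping an explicit registry means the model can only trigger functions you have chosen to expose; the result would normally be sent back to the model so it can phrase a final answer.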

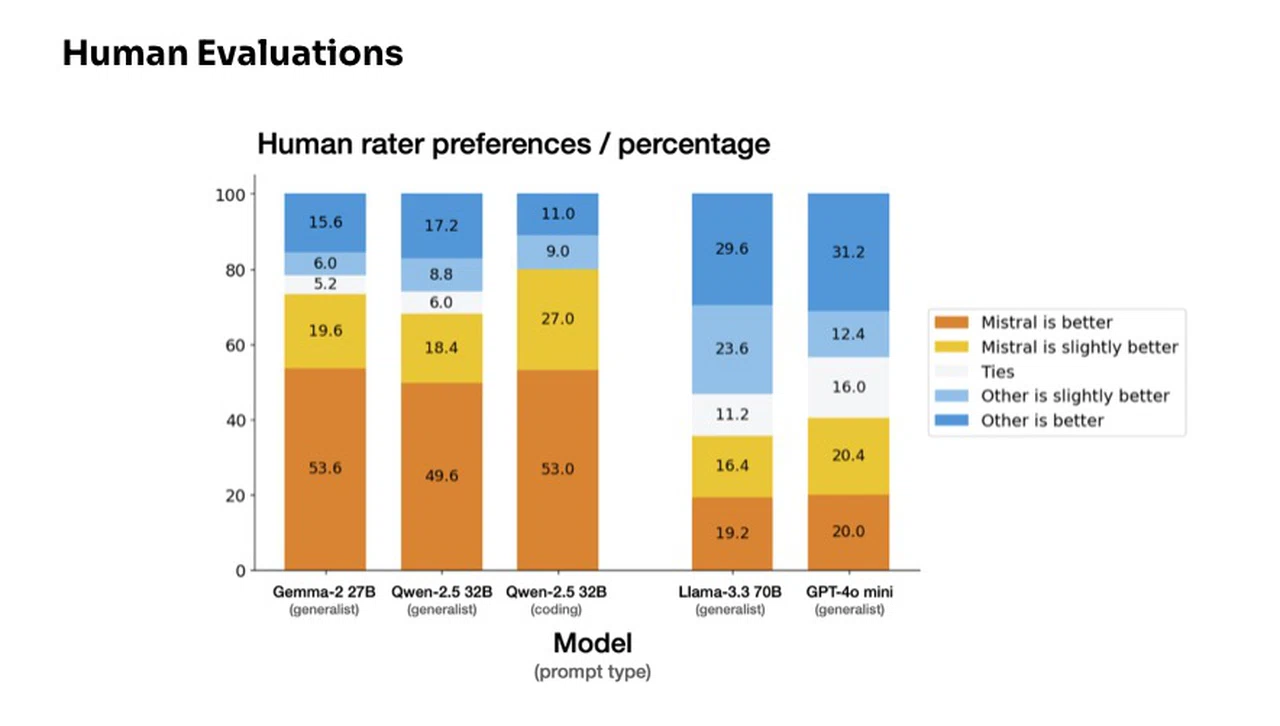

Although it is smaller than the largest models, Mistral Small 3 competes effectively on accuracy and reasoning, making it a practical choice for general-purpose tasks. Because it runs efficiently on both cloud-based and local systems, it suits organizations with varying resource constraints.
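Why quantization matters for local deployment comes down to simple arithmetic: weight memory is roughly parameter count times bits per weight. The back-of-the-envelope sketch below applies that to 24 billion parameters. It counts weights only; the KV cache, activations, and runtime overhead come on top, so treat the figures as lower bounds rather than hardware requirements.

```python
# Back-of-the-envelope weight memory for a 24-billion-parameter model.
# Counts weights only; KV cache, activations, and runtime overhead are extra.
PARAMS = 24_000_000_000


def weight_memory_gb(params: int, bits_per_weight: float) -> float:
    """Gigabytes (10**9 bytes) needed just to hold the weights."""
    return params * bits_per_weight / 8 / 1e9


for label, bits in [("16-bit (BF16/FP16)", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>18}: {weight_memory_gb(PARAMS, bits):.0f} GB")
```

This prints 48 GB, 24 GB, and 12 GB respectively. Practical 4-bit formats also store per-block scaling data, so real quantized files come out somewhat larger than the idealized 12 GB, but the halving at each step is what brings a 24B model within reach of high-memory laptops and single consumer GPUs.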

Versatility Across Applications

Mistral Small 3’s adaptability makes it suitable for a wide range of applications, offering developers the tools to create innovative solutions. Key use cases include:

- Private Chatbots: Build secure, personalized conversational agents tailored to the needs of businesses or individual users.

- Retrieval-Augmented Generation (RAG): Enhance information retrieval and synthesis for knowledge-based systems and research applications.

- Industry-Specific Tools: Fine-tune the model for specialized applications in fields such as healthcare, education, customer service, and creative industries.

The model’s ability to operate seamlessly in both cloud environments and local systems provides organizations with the flexibility to balance cost and performance. This adaptability ensures that Mistral Small 3 can cater to diverse environments, from enterprise-scale solutions to individual projects.
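Retrieval-augmented generation boils down to two steps: find the passages most relevant to a question, then place them in the prompt so the model answers from them. The minimal sketch below uses a bag-of-words cosine similarity as a stand-in for the embedding model and vector store a production RAG system would normally use; `DOCS` is a hypothetical knowledge base, and the step that actually sends the prompt to Mistral Small 3 is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; in practice these would be your own documents.
DOCS = [
    "Mistral Small 3 is released under the Apache 2.0 license.",
    "The model has 24 billion parameters.",
    "Quantized models can run on laptops with enough memory.",
]


def tokenize(text: str) -> Counter:
    """Lowercased word counts (bag of words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Ground the model by placing retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


question = "What license is the model released under?"
prompt = build_prompt(question, retrieve(question, DOCS, k=1))
print(prompt)
```

Because the model’s context window is finite, the number of passages (`k`) is a trade-off between coverage and leaving room for the answer.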

Competitive Strengths and Advantages

Mistral Small 3’s adaptability and efficiency are among its defining strengths, making it a versatile tool for developers and organizations. Key advantages include:

- Reasoning and Storytelling: The model delivers strong performance in generating coherent, structured outputs, making it ideal for applications requiring detailed or succinct responses, such as creative writing or technical documentation.

- Resource Efficiency: Compared to larger models, Mistral Small 3 offers a cost-effective solution without significant compromises in quality, making it an attractive option for organizations looking to optimize their AI investments.

Together, these strengths make Mistral Small 3 a compelling alternative to larger, resource-intensive systems, balancing performance and accessibility.

Driving the Future of AI Development

The release of Mistral Small 3 reflects a broader industry trend toward smaller, more efficient AI models capable of handling a wide range of tasks. As developers explore its capabilities, the model is expected to inspire fine-tuned versions tailored for specialized applications, such as legal analysis, creative writing, and domain-specific problem-solving.

This shift highlights the growing preference for compact, cost-effective models, with larger, resource-intensive systems reserved for highly specialized needs. By bridging the gap between performance and accessibility, Mistral Small 3 sets a new standard for what smaller models can achieve, paving the way for a future where AI is more adaptable and inclusive than ever before.

Media Credit: Sam Witteveen

