Navigating the ever-expanding world of large language models (LLMs) can feel like juggling too many pieces of a puzzle. Each provider has its own quirks—unique APIs, syntax variations, and specific requirements—that can leave developers feeling overwhelmed and bogged down in the details. If you’ve ever wished for a simpler way to manage these complexities, you’re not alone. The good news? There’s a solution in the form of LiteLLM that promises to bring clarity and efficiency to the chaos, allowing you to focus on what truly matters: building impactful applications.

LiteLLM is an open source library designed to unify and streamline how developers interact with multiple LLMs. Imagine having a single, consistent interface that works seamlessly across providers like OpenAI, Anthropic, and Gemini, eliminating the need to constantly adapt to different API styles. LiteLLM not only simplifies your workflow but also opens the door to faster development, easier model switching, and support for diverse use cases—all without compromising on flexibility or functionality. This guide by Trelis Research provides more insight into how you can use LiteLLM to simplify your development workflows.

TL;DR Key Takeaways:

- LiteLLM provides a unified API for working with large language models (LLMs) from multiple providers such as OpenAI, Anthropic, and Gemini, using a consistent OpenAI-style syntax to simplify workflows and reduce complexity.

- It supports advanced features such as long context handling, tool integration, streaming responses, and image input processing, making it suitable for diverse and complex use cases.

- LiteLLM works with custom API endpoints, so it is compatible with locally deployed or proprietary models and offers flexibility for cost-effective and privacy-focused deployments.

- The library is easy to install and use, with minimal configuration and a low learning curve, so developers can start building applications without extensive setup or documentation review.

- LiteLLM stands out by focusing on lightweight API-level integration rather than abstracting workflows, offering developers direct control, broad compatibility, and enterprise-grade features for large-scale applications.

Why Use a Unified API

Developers often run into inconsistent API designs when working with different LLM providers. LiteLLM addresses this with a standardized, open source interface that uses OpenAI-style syntax across providers such as OpenAI, Anthropic, and Gemini, so the development experience stays consistent regardless of the underlying model. You no longer need to learn and adapt to multiple syntax styles, which reduces complexity, speeds up development, and makes switching between models straightforward. This approach delivers several key advantages:

- Faster Development: Spend less time adapting to the quirks of individual APIs and more time focusing on building your application.

- Flexibility: Seamlessly switch between models, such as OpenAI’s GPT-4 and Anthropic’s Claude, with minimal code modifications.

- Reduced Complexity: Manage all interactions through a single, standardized interface, simplifying your workflow.

By unifying API interactions, LiteLLM enables developers to work more efficiently, adapt to evolving project requirements, and focus on delivering impactful solutions. Whether your application involves handling long contexts, integrating external tools, or processing image inputs, LiteLLM is designed to address these diverse needs with precision and efficiency.
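To make the model-switching point concrete, here is a minimal sketch, assuming the `litellm` package is installed and the relevant provider keys are available in the environment. The model identifiers are illustrative assumptions and may need replacing with models your account can access. `build_request` and `ask` are hypothetical helper names used for this example, not part of LiteLLM.

```python
from typing import Any

# Illustrative model identifiers (assumptions): substitute the models you can access.
MODELS = ["gpt-4o", "claude-3-5-sonnet-20240620", "gemini/gemini-1.5-pro"]


def build_request(model: str, prompt: str) -> dict[str, Any]:
    """Build OpenAI-style keyword arguments; only `model` differs per provider."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": prompt},
        ],
    }


def ask(model: str, prompt: str) -> str:
    """Send the request through LiteLLM and return the reply text."""
    # Imported lazily so build_request can be used without the library installed.
    from litellm import completion

    response = completion(**build_request(model, prompt))
    # LiteLLM returns an OpenAI-style response object.
    return response.choices[0].message.content


# Example usage (needs API keys and network access):
# for model in MODELS:
#     print(model, "->", ask(model, "Name one benefit of a unified API."))
```

The message list is identical for every provider, so switching models comes down to changing one string.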

Streamlined Development for Diverse Applications

LiteLLM is designed to simplify the development process for a wide range of applications. By abstracting the complexities of different APIs, it reduces the overhead of managing unique syntax, token limits, and model-specific parameters. This abstraction enables faster prototyping and deployment, making LiteLLM a versatile choice for projects of all sizes.

Some of the key benefits include:

- Ease of Use: A consistent API design minimizes the learning curve, allowing developers to focus on building features rather than troubleshooting syntax issues.

- Broad Compatibility: Support for various use cases, from text generation and summarization to image processing and tool integration.

- Scalability: Efficiently handle complex applications without being hindered by API-specific limitations.

Whether you’re building a chatbot, summarizing lengthy documents, or integrating external tools, LiteLLM simplifies the process, allowing you to focus on innovation and functionality.

LiteLLM – One Unified API for all LLMs

Advanced Features for Complex Use Cases

LiteLLM stands out with its robust feature set, designed to meet the demands of modern, complex applications. Its advanced capabilities include:

- Long Context Handling: Work with the large token limits of different models, ensuring compatibility with tasks that require large input or output sizes.

- Tool Integration: Use consistent syntax to integrate external tools seamlessly, regardless of the LLM provider.

- Streaming Responses: Enable real-time data processing with OpenAI-style streaming parameters, ideal for dynamic applications.

- Image Input Support: Process image inputs via base64 encoding or URLs, expanding the scope of applications beyond text-based tasks.

These features make LiteLLM a versatile and adaptable option for developers, so that even complex use cases can be handled through the same interface.
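Two of the features above, image input and streaming, can be sketched as follows. This is a minimal example under stated assumptions: `litellm` is installed, the chosen model accepts images, and the helper names (`image_message`, `collect_stream`, `stream_reply`) are hypothetical. The message and chunk shapes follow the OpenAI-style format the article describes.

```python
import base64
from typing import Any, Iterable


def image_message(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict[str, Any]:
    """Build an OpenAI-style user message carrying text plus a base64 image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            # A plain https URL could be used here instead of a data URL.
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


def collect_stream(chunks: Iterable[Any]) -> str:
    """Join the text deltas of OpenAI-style streaming chunks into one string."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:  # skip chunks that carry no choices
            continue
        delta = chunk.choices[0].delta.content
        if delta:  # some chunks (for example the last one) carry no text
            parts.append(delta)
    return "".join(parts)


def stream_reply(model: str, messages: list[dict[str, Any]]) -> str:
    """Request a streamed completion via LiteLLM and return the full text."""
    # Imported lazily so the helpers above work without the library installed.
    from litellm import completion

    return collect_stream(completion(model=model, messages=messages, stream=True))
```

In a real application you would usually print or forward each delta as it arrives rather than joining them at the end; the collector is used here only to keep the sketch short.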

Custom Endpoint Integration

LiteLLM extends its functionality beyond compatibility with major LLM providers by supporting custom API endpoints. This feature allows developers to integrate models served locally, for example through LM Studio, or hosted on cloud GPU platforms such as RunPod. As long as the endpoint adheres to OpenAI-style API syntax, LiteLLM can interact with it.

This flexibility is particularly valuable for:

- Proprietary Models: Incorporate custom models tailored to your organization’s specific needs.

- Alternative Deployments: Explore cost-effective or privacy-focused deployment options without sacrificing functionality.

With LiteLLM, you can adapt the library to meet unique project requirements while maintaining the simplicity and consistency of its unified API.
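A custom endpoint call might look like the sketch below. It assumes `litellm` is installed and an OpenAI-compatible server is already running. The URL (a port commonly used by LM Studio's local server), the model name, and the helper names are all assumptions for illustration, so adjust them to your setup.

```python
from typing import Any

# Assumed values for illustration only.
LOCAL_API_BASE = "http://localhost:1234/v1"  # adjust to where your server listens
LOCAL_MODEL = "my-local-model"  # hypothetical; use the name your server reports


def local_request(
    prompt: str, api_base: str = LOCAL_API_BASE, model: str = LOCAL_MODEL
) -> dict[str, Any]:
    """Build keyword arguments for an OpenAI-compatible custom endpoint."""
    return {
        # The "openai/" prefix asks LiteLLM to treat the endpoint as OpenAI-compatible.
        "model": f"openai/{model}",
        "api_base": api_base,
        "api_key": "not-needed",  # placeholder; many local servers ignore the key
        "messages": [{"role": "user", "content": prompt}],
    }


def ask_local(prompt: str) -> str:
    """Send the prompt to the custom endpoint through LiteLLM."""
    # Imported lazily so local_request can be used without the library installed.
    from litellm import completion

    response = completion(**local_request(prompt))
    return response.choices[0].message.content


# Example usage (needs a running local server):
# print(ask_local("Summarize what a unified API is in one sentence."))
```

Apart from `api_base` and the model prefix, the request is the same as for a hosted provider.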

Broad Compatibility with Leading Models

LiteLLM supports a wide range of LLMs, including industry leaders like GPT-4, Claude, and Gemini. It also accommodates model-specific requirements, such as Anthropic Claude’s unique syntax or OpenAI’s o1 series. This broad compatibility means developers can select the most suitable model for their application without being constrained by API differences.

By offering this level of flexibility, LiteLLM lets developers use the strengths of different models and choose the one that performs best for each project.

Getting Started with LiteLLM

LiteLLM is designed for quick and easy integration into your projects. With a single command, `pip install litellm`, you can install the library and start using it. After setting API keys for the providers you use, typically as environment variables, you can begin making requests with minimal configuration.

The intuitive design of LiteLLM ensures:

- Quick Setup: Get started in minutes with straightforward installation and configuration.

- Minimal Learning Curve: Focus on building your application without spending excessive time navigating complex API documentation.

This simplicity makes LiteLLM an accessible and powerful tool for developers of all experience levels, allowing them to quickly harness the capabilities of multiple LLMs.
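As a rough illustration of that setup, the sketch below checks which provider keys are present and then makes a first request. It assumes `litellm` has been installed with pip. The environment variable names are the ones commonly used for these providers and should be verified against the LiteLLM documentation; the helper names and the default model are assumptions for this example.

```python
import os
from typing import Mapping

# Environment variable names assumed for each provider; verify against the docs.
PROVIDER_KEYS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "gemini": "GEMINI_API_KEY",
}


def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the providers whose API key is unset or empty in `env`."""
    return [name for name, var in PROVIDER_KEYS.items() if not env.get(var)]


def first_request(prompt: str, model: str = "gpt-4o") -> str:
    """Make a single completion call through LiteLLM and return the text."""
    # Imported lazily so missing_keys can be used without the library installed.
    from litellm import completion

    response = completion(model=model, messages=[{"role": "user", "content": prompt}])
    return response.choices[0].message.content


# Example usage (needs an API key and network access):
# if "openai" not in missing_keys():
#     print(first_request("Say hello in five words."))
```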

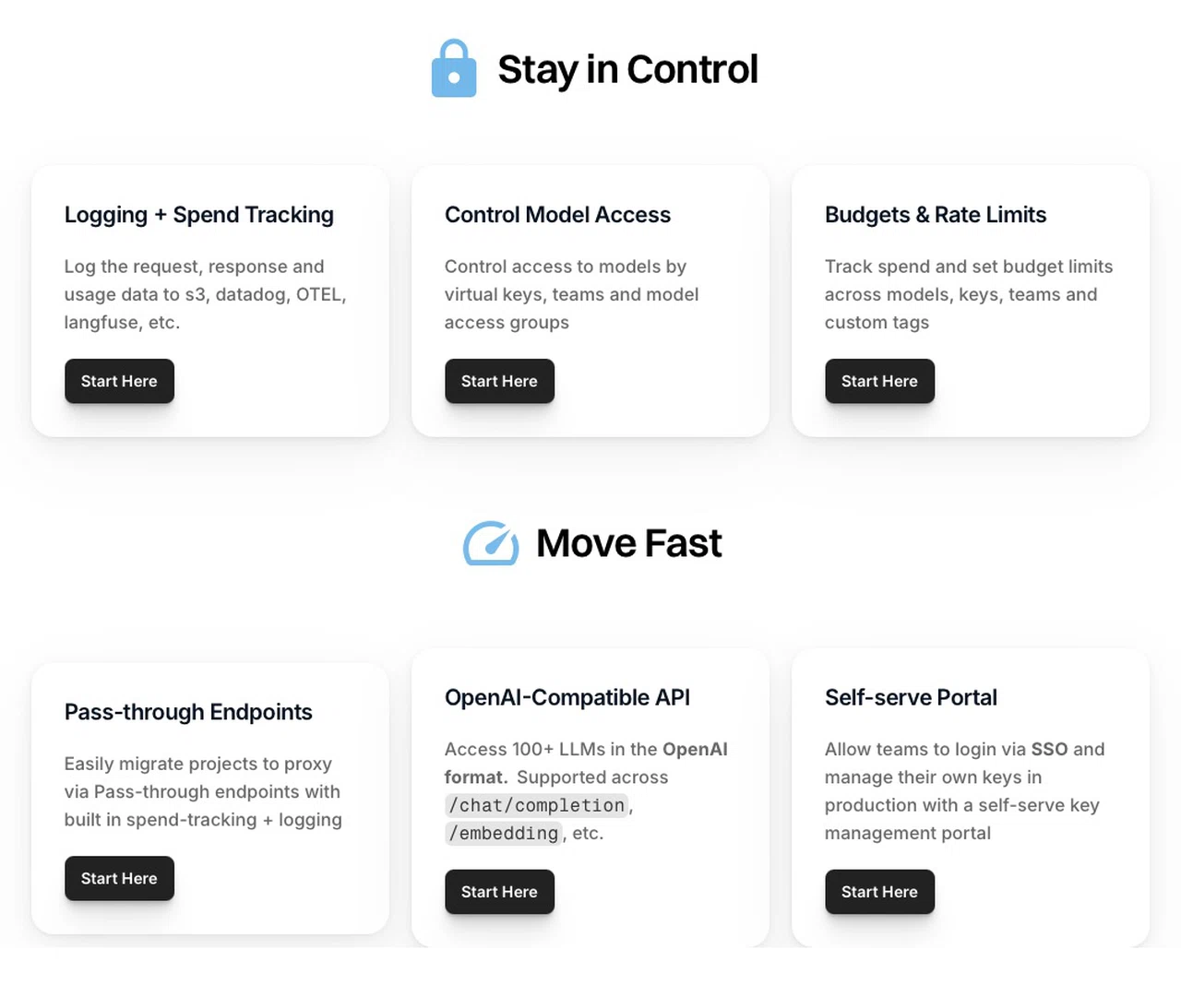

Enterprise-Grade Features and Support

For organizations with complex requirements, LiteLLM offers enterprise-grade features and robust support. These include:

- Advanced Error Handling: Manage edge cases, such as system messages and varying API formats, with ease.

- Long-Term Sustainability: Benefit from ongoing updates and support from a dedicated development team.

These capabilities make LiteLLM a reliable choice for large-scale applications that demand high performance, stability, and reliability.

How LiteLLM Stands Out

LiteLLM distinguishes itself from alternatives like LangChain or DSPy by focusing on API-level integration rather than abstracting application workflows. This approach makes it a lightweight and flexible option for developers who need direct control over API interactions. By prioritizing simplicity and adaptability, LiteLLM caters to a wide range of use cases without introducing unnecessary complexity.

For developers seeking a streamlined, efficient, and versatile tool to manage multiple LLMs, LiteLLM offers a compelling solution that balances functionality with ease of use.

Media Credit: Trelis Research
