To expand its AI-optimized infrastructure portfolio, Google has introduced the Cloud TPU v5e, which it describes as a cost-efficient, versatile, and scalable Cloud TPU. The TPU v5e is currently available in preview, and Google presents it as part of its commitment to providing cutting-edge technology for AI development now and in the future.

The Cloud TPU v5e is not just a standalone product. It integrates with Google Kubernetes Engine (GKE), Vertex AI, and leading frameworks such as PyTorch, JAX, and TensorFlow. This integration lets developers use the TPU v5e in a variety of environments, enhancing the versatility of their AI projects.

In addition to the TPU v5e, Google is also set to make its A3 VMs, based on NVIDIA H100 GPUs, generally available next month. These VMs are designed to power large-scale AI models, further expanding Google’s AI-optimized infrastructure offerings.

The Cloud TPU v5e is designed for medium- and large-scale training and inference. It delivers up to 2x higher training performance per dollar and up to 2.5x higher inference performance per dollar for LLMs and gen AI models compared to its predecessor, the Cloud TPU v4. This makes it a cost-effective solution for businesses looking to scale their AI operations.
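
To see what a performance-per-dollar multiplier could mean for a budget, here is a minimal Python sketch. The baseline spend is a made-up placeholder rather than a Google price, and the function name `cost_for_same_work` is hypothetical. The 2x and 2.5x multipliers are the "up to" figures quoted above, so the results are best cases, not guarantees.

```python
def cost_for_same_work(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost of completing the same amount of work after a performance-per-dollar gain.

    If each dollar buys `perf_per_dollar_gain` times more work, the same
    workload costs the baseline divided by that gain.
    """
    if perf_per_dollar_gain <= 0:
        raise ValueError("perf_per_dollar_gain must be positive")
    return baseline_cost / perf_per_dollar_gain


# Hypothetical spend on Cloud TPU v4, in arbitrary currency units (assumption).
BASELINE_COST = 1000.0

# "Up to" multipliers quoted in the article for TPU v5e versus TPU v4.
training_cost = cost_for_same_work(BASELINE_COST, 2.0)
inference_cost = cost_for_same_work(BASELINE_COST, 2.5)

print(f"Training:  {training_cost:.0f} units for the same work")
print(f"Inference: {inference_cost:.0f} units for the same work")
```

Under these assumptions, the best case is roughly half the training cost and 40% of the inference cost for an equivalent workload.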

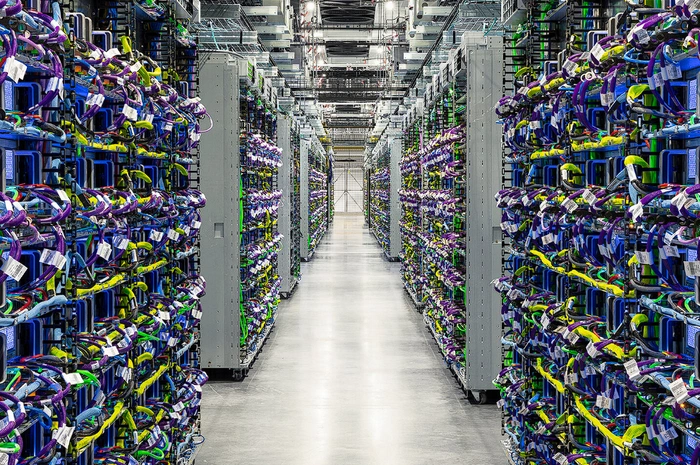

Inside a Google Cloud TPU Data Centre

“Get an inside look at the magic of Google Cloud TPUs, including a rare inside view of the data centers where it all happens. Customers use Cloud TPUs to run some of the world’s largest AI workloads and that power comes from much more than just a chip. In this video, take a look at the components of the TPU system, including data center networking, optical circuit switches, water cooling systems, biometric security verification and more.”

The TPU v5e pods allow up to 256 chips to be interconnected, boasting an aggregate bandwidth of more than 400 Tb/s and 100 petaOps of INT8 performance. This high level of interconnectivity and performance makes the TPU v5e a powerful tool for AI development.
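
As a rough sanity check on those pod-level figures, the sketch below divides them evenly across the 256 chips. This is only back-of-the-envelope arithmetic: it treats the quoted "100 petaOps" and "more than 400 Tb/s" as exact totals, assumes an even split per chip, and uses constant and function names that are purely illustrative.

```python
CHIPS_PER_POD = 256          # maximum chips per TPU v5e pod, from the article
POD_INT8_OPS = 100e15        # 100 petaOps of INT8, treated as an exact total (assumption)
POD_BANDWIDTH_BITS = 400e12  # "more than 400 Tb/s", used here as a lower bound


def per_chip(pod_total: float, chips: int = CHIPS_PER_POD) -> float:
    """Evenly divide a pod-level figure across its chips."""
    return pod_total / chips


# Convert to tera-units (1e12) for readability.
int8_tops_per_chip = per_chip(POD_INT8_OPS) / 1e12
bandwidth_tbps_per_chip = per_chip(POD_BANDWIDTH_BITS) / 1e12

print(f"INT8 per chip:      ~{int8_tops_per_chip:.1f} TOPS")
print(f"Bandwidth per chip: at least ~{bandwidth_tbps_per_chip:.2f} Tb/s")
```

With these inputs, each chip works out to roughly 390 TOPS of INT8 compute and at least about 1.56 Tb/s of its share of the aggregate bandwidth.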

Google is also simplifying the operation of TPUs with the general availability of Cloud TPUs in GKE. This move is expected to make it easier for developers to leverage the power of TPUs in their projects.

Vertex AI also now supports training with various frameworks and libraries using Cloud TPU VMs. This means that developers can use their preferred tools and libraries with Cloud TPUs.

Google is also introducing Multislice technology in preview. This innovative technology allows users to scale AI models beyond the boundaries of physical TPU pods, offering unprecedented scalability for AI projects.

The A3 VMs, powered by NVIDIA’s H100 Tensor Core GPUs, are purpose-built to train and serve demanding gen AI workloads and LLMs. They feature dual 4th Gen Intel Xeon Scalable processors, eight NVIDIA H100 GPUs per VM, and 2 TB of host memory.

Google Cloud continues to provide customers with a wide range of AI-optimized compute options across TPUs, GPUs, and CPUs. These options are designed to cater to the training and serving needs of the most compute-intensive models, making Google Cloud a one-stop-shop for AI development.

Source: Google
