MIT CSAIL researchers have demonstrated a gesture- and muscle-based drone controller that lets pilots fly remote drones using natural gestures and movements, such as clenching a fist, picked up by sensors worn on the biceps, triceps, and forearm. Watch the demonstration video below to learn more about the system, which is still under development.

“By using a small number of wearable sensors and plug-and-play algorithms, the system aims to start reducing the barrier to casual users interacting with robots. It builds an expandable vocabulary for communicating with a robot assistant or other electronic devices in a more natural way. We look forward to extending this vocabulary to additional scenarios and to evaluating it with more users and robots.”

“From spaceships to Roombas, robots have the potential to be valuable assistants and to extend our capabilities. But it can still be hard to tell them what to do – we’d like to interact with a robot as if we were interacting with another person, but it’s often clumsy to use pre-specified voice/touchscreen commands or to set up elaborate sensors. Allowing robots to understand our nonverbal cues such as gestures with minimal setup or calibration can be an important step towards more pervasive human-robot collaboration.

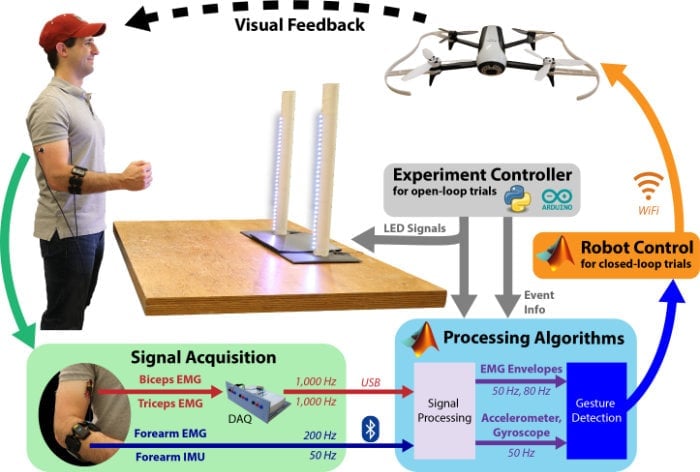

“This system, dubbed Conduct-a-Bot, aims to take a step towards these goals by detecting gestures from wearable muscle and motion sensors. A user can make gestures to remotely control a robot by wearing small sensors on their biceps, triceps, and forearm. The current system detects 8 predefined navigational gestures without requiring offline calibration or training data – a new user can simply put on the sensors and start gesturing to remotely pilot a drone.”

Source: MIT, MITCSAIL, Engadget
